- (Exam Topic 3)

You have an Azure data factory named ADM that contains a pipeline named Pipeline1. Pipeline1 must execute every 30 minutes with a 15-minute offset.

You need to create a trigger for Pipeline1. The trigger must meet the following requirements:

• Backfill data from the beginning of the day to the current time.

• If Pipeline1 fails, ensure that the pipeline can re-execute within the same 30-minute period.

• Ensure that only one concurrent pipeline execution can occur.

• Minimize development and configuration effort.

Which type of trigger should you create?

Correct Answer:

A
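The timing requirement in this question (a 30-minute interval, a 15-minute offset, and a backfill from the start of the day) can be worked through as plain arithmetic. The sketch below is only an illustration of which 30-minute slots a backfill would need to cover; it is not how Azure Data Factory computes trigger windows, and the function name `window_starts` and the sample timestamp are hypothetical.

```python
from datetime import datetime, timedelta


def window_starts(now, interval=timedelta(minutes=30), offset=timedelta(minutes=15)):
    """Return the start of every slot from midnight (plus offset) up to `now`."""
    # Beginning of the current day, as required by the backfill condition.
    day_start = now.replace(hour=0, minute=0, second=0, microsecond=0)
    start = day_start + offset
    starts = []
    # Step forward one interval at a time until we pass the current time.
    while start <= now:
        starts.append(start)
        start += interval
    return starts


# Hypothetical "current time" chosen for illustration only.
now = datetime(2024, 1, 1, 2, 0)
windows = window_starts(now)
for w in windows:
    print(w.strftime("%H:%M"))
```

With the assumed current time of 02:00, the slots start at 00:15, 00:45, 01:15, and 01:45; each slot is independent, which is what lets a failed slot be re-run without affecting the others.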

- (Exam Topic 3)

You plan to use an Apache Spark pool in Azure Synapse Analytics to load data to an Azure Data Lake Storage Gen2 account.

You need to recommend which file format to use to store the data in the Data Lake Storage account. The solution must meet the following requirements:

• Column names and data types must be defined within the files loaded to the Data Lake Storage account.

• Data must be accessible by using queries from an Azure Synapse Analytics serverless SQL pool.

• Partition elimination must be supported without having to specify a specific partition.

What should you recommend?

Correct Answer:

D

- (Exam Topic 3)

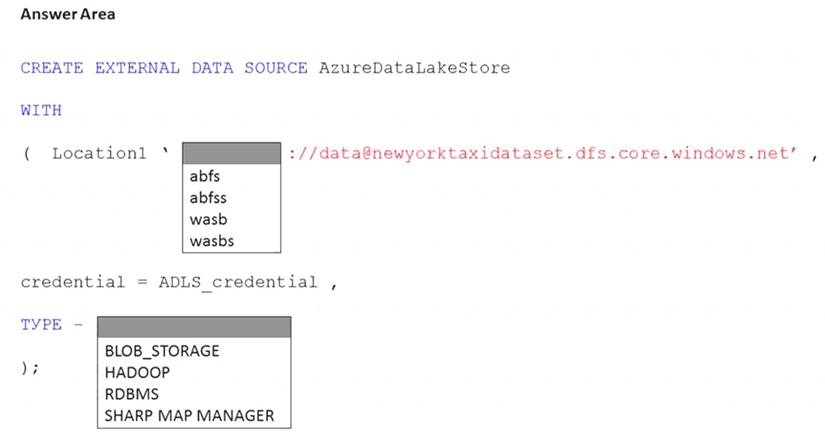

You have an Azure subscription that contains an Azure Synapse Analytics dedicated SQL pool named Pool1 and an Azure Data Lake Storage account named storage1. Storage1 requires secure transfers.

You need to create an external data source in Pool1 that will be used to read .orc files in storage1. How should you complete the code? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Solution:


Reference:

https://docs.microsoft.com/en-us/sql/t-sql/statements/create-external-data-source-transact-sql?view=azure-sqldw

Does this meet the goal?

Correct Answer:

A

- (Exam Topic 3)

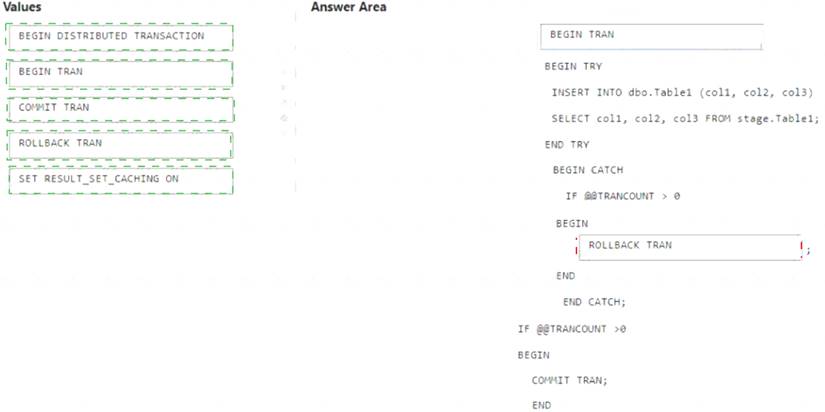

You are batch loading a table in an Azure Synapse Analytics dedicated SQL pool.

You need to load data from a staging table to the target table. The solution must ensure that if an error occurs while loading the data to the target table, all the inserts in that batch are undone.

How should you complete the Transact-SQL code? To answer, drag the appropriate values to the correct targets. Each value may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Solution:

Does this meet the goal?

Correct Answer:

A
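The requirement in this question, that every insert in a batch is undone if any one of them fails, is the all-or-nothing behavior of a transaction with rollback. The following is a minimal sketch of that idea only; it uses Python's built-in `sqlite3` module as a stand-in rather than Transact-SQL on a dedicated SQL pool, and the table names, columns, and sample rows are hypothetical.

```python
import sqlite3

# In-memory database used purely for illustration (not Azure Synapse).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE staging (id INTEGER, amount REAL)")
conn.execute("CREATE TABLE target (id INTEGER PRIMARY KEY, amount REAL NOT NULL)")
# The second staging row has a NULL amount and will violate the target's constraint.
conn.executemany(
    "INSERT INTO staging VALUES (?, ?)",
    [(1, 10.0), (2, None), (3, 30.0)],
)
conn.commit()


def load_batch(conn):
    """Copy staging rows to target; undo the whole batch on any error."""
    rows = conn.execute("SELECT id, amount FROM staging ORDER BY id").fetchall()
    try:
        # The connection context manager commits on success
        # and rolls back if an exception is raised inside the block.
        with conn:
            for row in rows:
                conn.execute("INSERT INTO target (id, amount) VALUES (?, ?)", row)
        return True
    except sqlite3.IntegrityError:
        return False


loaded = load_batch(conn)
row_count = conn.execute("SELECT COUNT(*) FROM target").fetchone()[0]
print(loaded, row_count)
```

The first row is inserted before the second one fails, yet the target table ends up empty because the rollback undoes the whole batch.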

- (Exam Topic 3)

You are deploying a lake database by using an Azure Synapse database template.

You need to add additional tables to the database. The solution must use the same grouping method as the template tables.

Which grouping method should you use?

Correct Answer:

A

Business area: This is how the Azure Synapse database templates group tables by default. Each template consists of one or more enterprise templates that contain tables grouped by business areas. For example, the Retail template has business areas such as Customer, Product, Sales, and Store. Using the same grouping method as the template tables helps you maintain consistency and compatibility with the industry-specific data model.

https://techcommunity.microsoft.com/t5/azure-synapse-analytics-blog/database-templates-in-azure-synapse-anal